The race is on to develop a new generation of voice assistants that can not only understand spoken commands but also perceive and respond to human emotions. Traditional voice assistants like Siri, Alexa, and Google Assistant have revolutionized how we interact with technology, but they fall short in one crucial aspect: emotional intelligence.

Are Siri and Alexa Relics of the Past?

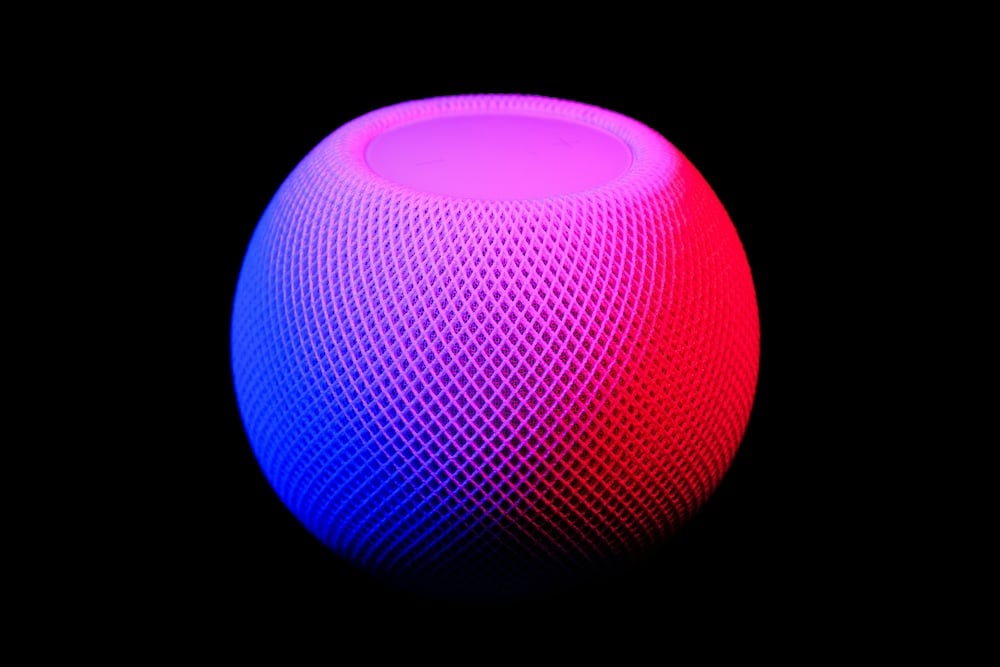

The era of Siri, Alexa, and their ilk may soon draw to a close. These first-generation voice assistants, once heralded as revolutionary breakthroughs, are now at risk of becoming obsolete, unable to keep pace with the rapidly advancing demands of the modern consumer. They have been the go-to voice assistants for completing a wide range of tasks, from setting reminders and playing music to providing weather forecasts and answering trivia questions. However, their shortcomings have become increasingly apparent as users become more tech-savvy and spoiled for choice.

The future of voice interaction is one where Siri and Alexa may become mere relics, overshadowed by the more sophisticated, empathetic, and trustworthy AI voice assistants that are poised to redefine the way we engage with the digital world.

One of the primary limitations of the current crop of voice assistants is their reliance on a limited set of predefined commands and responses. While they can handle a wide variety of basic queries, they often struggle with more nuanced or open-ended conversations, leaving users feeling frustrated and disconnected. Adding to the pain, the robotic nature of their voices and the occasional misunderstanding of user intent can hinder the seamless, natural interaction that consumers expect.

While Siri, Alexa, and Google Assistant have become household names, their capabilities are still limited to basic tasks like setting reminders, playing music, and providing weather updates. These voice assistants excel at understanding and responding to direct commands, but they lack the ability to comprehend and respond to the emotional context behind those commands.

For example, if you ask Alexa to play a sad song while sounding upset, it will simply play a random sad song without acknowledging or responding to your emotional state. This disconnect between the user’s emotional state and the assistant’s response can lead to frustration and a lack of meaningful interaction.

No matter the age group, everyone seems more discerning about the technology they invite into their homes and lives. The opaque data-gathering practices and potential biases of existing voice assistants have left many consumers wary of their long-term implications, as concerns around privacy, data security, and ethical AI practices continue to grow.

The Rise of Emotion-Aware AI Voice Assistants

Recognizing the need for a more advanced, user-centric approach to voice technology, a new breed of AI-powered voice assistants is emerging, poised to revolutionize the way we interact with our digital companions.

These next-generation voice assistants are leveraging the latest advancements in natural language processing, machine learning, and conversational AI to create a more natural, intuitive, and personalized user experience. They are designed to understand context, nuance, and user intent, allowing for more fluid, human-like dialogues that go far beyond the rigid command-and-response structure of their predecessors.

As AI technology continues to advance, researchers and tech companies are working to develop voice assistants that can not only understand spoken words but also detect and respond to the user’s emotional state. This new generation of emotion-aware AI voice assistants aims to bridge the gap between human and machine interaction, providing a more natural and empathetic experience.

One of the key challenges in developing emotion-aware voice assistants is teaching the AI to recognize and interpret various emotional cues, such as tone of voice, facial expressions, and body language. This requires sophisticated machine learning algorithms and vast datasets of human emotional expressions to train the AI models effectively.
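To make "tone of voice" a little more concrete, here is a minimal Python sketch of two simple acoustic cues: loudness (RMS energy) and zero-crossing rate. It is only an illustration under stated assumptions. The function names and the synthetic test tones are hypothetical, and real emotion-recognition systems rely on trained models over far richer features than these two numbers.

```python
import math


def rms_energy(samples):
    """Root-mean-square amplitude: a rough proxy for how loud the audio is."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def zero_crossing_rate(samples):
    """Fraction of adjacent sample pairs whose sign differs."""
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a >= 0) != (b >= 0)
    )
    return crossings / (len(samples) - 1)


def sine_wave(freq_hz, amplitude, sample_rate=8000, duration_s=0.1):
    """Synthetic stand-in for recorded speech (an assumption for this demo)."""
    n = int(sample_rate * duration_s)
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
        for i in range(n)
    ]


# Two made-up signals: a quiet, low tone and a louder, higher one.
calm = sine_wave(freq_hz=120, amplitude=0.2)
agitated = sine_wave(freq_hz=300, amplitude=0.8)

for name, wave in (("calm", calm), ("agitated", agitated)):
    print(name, round(rms_energy(wave), 3), round(zero_crossing_rate(wave), 3))
```

The louder, higher tone scores higher on both measures. A trained model would combine many cues like these with labeled examples before guessing at an emotion.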

Companies like Affectiva, a spin-off from the Massachusetts Institute of Technology (MIT), are at the forefront of this research. Affectiva’s Emotion AI technology can detect and analyze facial expressions, vocal intonations, and physiological signals to estimate a person’s emotional state.

The Benefits of Emotion-Aware Voice Assistants

The potential benefits of emotion-aware voice assistants are vast and far-reaching. In the healthcare sector, for instance, these assistants could help doctors and caregivers better understand and respond to patients’ emotional needs, leading to improved patient care and outcomes.

In the customer service industry, emotion-aware voice assistants could revolutionize the way businesses interact with their customers. By detecting and responding to customer frustration or dissatisfaction, these assistants could provide more personalized and empathetic support, leading to increased customer satisfaction and loyalty.

Moreover, emotion-aware voice assistants could play a crucial role in mental health support and therapy. By recognizing and responding to emotional cues, these assistants could provide a safe and non-judgmental space for individuals to express their feelings and receive appropriate guidance or support.

AI voice assistants that can detect and respond to human emotions have numerous potential applications across various domains:

- Customer Service: Emotion-aware voice assistants can greatly enhance customer service experiences. By detecting frustration, anger, or dissatisfaction in a customer’s voice, the assistant can adjust its tone, provide empathetic responses, and escalate complex issues to human agents when needed. This can lead to improved customer satisfaction and loyalty.

- Mental Health Support: Voice assistants capable of recognizing emotional states like sadness, anxiety, or distress could play a role in mental health support. They can provide appropriate coping strategies, recommend seeking professional help, or even connect individuals to crisis hotlines in severe cases.

- Personalized Recommendations: By understanding a user’s emotional state, voice assistants can offer personalized recommendations for music, movies, activities, or other content tailored to their current mood or emotional needs, for example by suggesting uplifting or relaxing content when the user is feeling down or stressed.

- Education and Learning: Emotion-aware voice assistants could enhance educational experiences by adapting their teaching style, pace, and content delivery based on a student’s emotional state. If a student is frustrated or disengaged, the assistant could adjust its approach to improve engagement and learning outcomes.

- Elderly Care and Companionship: For elderly individuals living alone, emotion-detecting voice assistants could provide companionship and emotional support. By recognizing loneliness, sadness, or confusion, the assistant could offer appropriate responses, reminders, or even alert caregivers if necessary.

- Marketing and Advertising: By analyzing emotional responses to marketing campaigns, advertisements, or product experiences, emotion-aware voice assistants could provide valuable insights for companies to refine their strategies and messaging to better resonate with their target audience.

- Human-Computer Interaction: Emotion-aware voice assistants can enhance human-computer interaction by making it more natural and intuitive. By understanding emotional cues, the assistant can adjust its responses, tone, and behavior to create a more personalized and empathetic experience for the user.
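The customer service and mental health items above both describe the same basic pattern: estimate an emotion, then pick a response. A rough Python sketch of that decision step might look like the following. Everything here is an assumption for illustration, including the `EmotionEstimate` type, the label names, the action names, and both thresholds. It does not describe how any shipping assistant works.

```python
from dataclasses import dataclass


@dataclass
class EmotionEstimate:
    label: str         # e.g. "frustration", "sadness", "neutral" (hypothetical labels)
    confidence: float  # 0.0 to 1.0, as reported by a hypothetical classifier


# Assumed cut-offs, chosen only for this example.
MIN_CONFIDENCE = 0.5
ESCALATION_THRESHOLD = 0.8


def choose_response(estimate: EmotionEstimate) -> str:
    """Map a detected emotion to a coarse action for the assistant."""
    if estimate.confidence < MIN_CONFIDENCE:
        # Not sure enough about the emotion to act on it.
        return "continue"
    if estimate.label in {"frustration", "anger"}:
        if estimate.confidence >= ESCALATION_THRESHOLD:
            return "escalate_to_human"
        return "empathetic_reply"
    if estimate.label in {"sadness", "anxiety", "distress"}:
        return "offer_support"
    return "continue"


print(choose_response(EmotionEstimate("frustration", 0.9)))
print(choose_response(EmotionEstimate("sadness", 0.7)))
```

Keeping a low-confidence fallback matters in a design like this: acting on a wrong guess about someone's feelings can be worse than ignoring emotion altogether.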

While the integration of emotion detection capabilities into voice assistants presents exciting opportunities, it also raises important ethical considerations around privacy, data security, and potential misuse of emotional data. Addressing these concerns through robust ethical guidelines and regulations will be crucial as this technology continues to evolve.


Companies and technologies working on emotion-aware voice assistants include:

- Affectiva: This company’s Emotion AI technology can detect and analyze facial expressions, vocal intonations, and physiological signals to determine a person’s emotional state.

- Kairos: Kairos offers emotion recognition solutions that can identify emotions like joy, sadness, anger, contempt, disgust, fear, and surprise from facial expressions and speech.

- audEERING: audEERING has developed a demo called ERA (Emotionally Responsive Assistant) that integrates Voice AI into conversational AI agents, allowing them to perceive and respond to the user’s emotional expression.

- Nvidia’s Project Maxine: While not a voice assistant per se, Nvidia’s Maxine project showcases the potential of creating photorealistic AI avatars that can analyze and respond to emotional cues.

While still an emerging field, the integration of emotion detection capabilities into voice assistants presents exciting opportunities for more natural, empathetic, and personalized human-computer interactions across various applications.

The Road Ahead: Challenges and Ethical Considerations

As we bid farewell to the limitations of traditional voice assistants like Siri and Alexa, the race is on to develop a new generation of emotion-aware AI voice assistants that can truly understand and respond to human emotions.

While the development of emotion-aware voice assistants is an exciting prospect, it also raises important ethical and privacy concerns. As these assistants become more adept at recognizing and responding to emotional cues, there is a risk of data privacy violations and potential misuse of personal emotional data. There are concerns about the potential for emotional manipulation or exploitation by these assistants, particularly in vulnerable populations such as children or individuals with mental health conditions.

To address these concerns, it is crucial for researchers, developers, and policymakers to establish clear ethical guidelines and regulations surrounding the development and deployment of emotion-aware AI technologies. This includes ensuring data privacy, transparency, and accountability in the design and implementation of these systems.

Ultimately, the success of emotion-aware voice assistants will depend on striking the right balance between technological advancement and ethical responsibility, ensuring that these powerful AI systems are developed and deployed in a way that benefits humanity while respecting individual privacy and autonomy.

This is a big deal because the race for a new-age AI voice companion has only just begun. The company or institution that can best leverage the latest advancements in artificial intelligence, natural language processing, and user-centric design will emerge as the champion, shaping the future of voice technology and, perhaps, the very way we live our lives.

ZMSEND.com is a technology consultancy firm for design and custom code projects, with fixed monthly plans and 24/7 worldwide support.